Beyond Handwriting: Speculating on what is next for Ctrl Labs' Wristband Neural Interface

Published on April 02, 2024

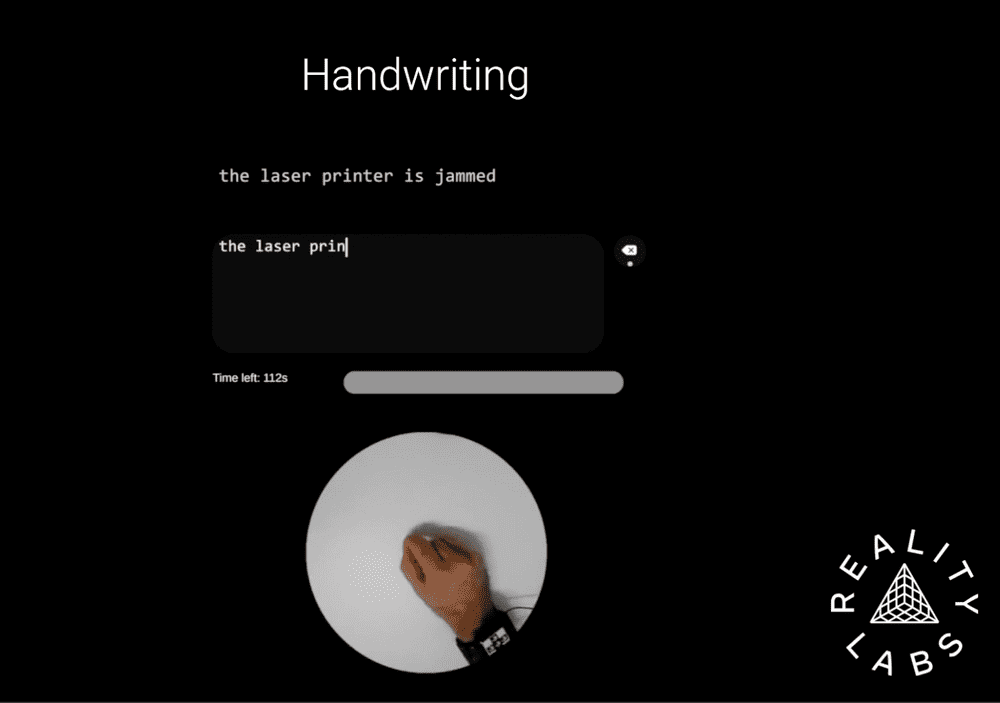

Ctrl Labs, within Meta’s Reality Labs division, released a paper about their incredible progress on a generalizable wristband-based neural interface. The choice of a handwriting demo task over the previously teased typing task is curious, and unless there is more coming soon, there is still a lot of work to be done before they can launch it as a product.

Soon after Mark Zuckerberg alluded to a neural interface that was ‘... actually kind of close to…a product in the, in the next few years…’ on the Morning Brew show, the Ctrl Labs team at Meta’s Reality Labs released a research paper about their sEMG wristband.

The paper describes how they used data from thousands of participants to train models that allow their wristband prototype (or research device) to work out of the box for new users, a first in the neural interface field. Their research is robust and reported in detail, describing how the team:

- Combined their multielectrode sEMG bracelet hardware with a scalable data collection infrastructure

- Used this setup to collect data from thousands of participants for wrist movements, gestures like thumb and finger taps, pinches and swipes, and handwriting tasks

- Developed models that achieved close to 90% classification accuracy for held-out participants on gesture detection and handwritten character recognition

Still from a supplementary video uploaded with the paper

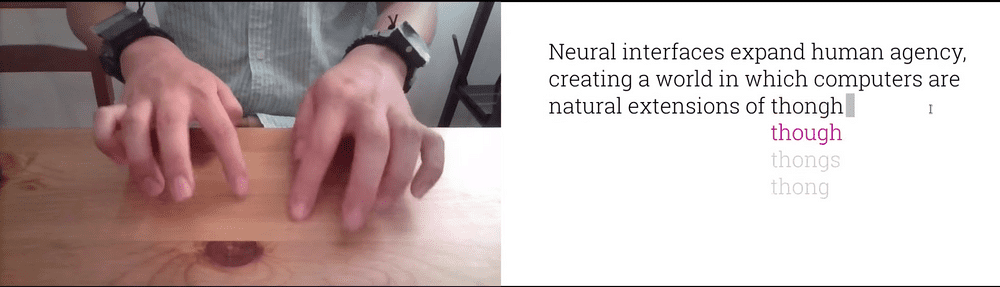


They showed that:

- Training on a large enough dataset allows you to build generalizable models for wrist sEMG (although the dataset itself isn't available).

- Model personalization further improves performance and reduces latency.
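To make the "held-out participants" idea concrete, here is a minimal sketch of a participant-level split, where nobody in the evaluation set contributed any training data. It is not the paper's pipeline: the function names, the 20% held-out fraction, and the synthetic samples are all assumptions made for illustration.

```python
import random


def split_by_participant(samples, held_out_fraction=0.2, seed=0):
    """Split (participant_id, features, label) tuples by participant.

    Hypothetical helper: every sample from a held-out participant goes to
    the test set, so the model never sees that person during training.
    """
    participants = sorted({pid for pid, _, _ in samples})
    rng = random.Random(seed)
    rng.shuffle(participants)
    n_held_out = max(1, int(len(participants) * held_out_fraction))
    held_out = set(participants[:n_held_out])
    train = [s for s in samples if s[0] not in held_out]
    test = [s for s in samples if s[0] in held_out]
    return train, test


def accuracy(predict, test):
    """Fraction of held-out samples where predict(features) matches the label."""
    correct = sum(1 for _, features, label in test if predict(features) == label)
    return correct / len(test)


# Synthetic placeholder data: 10 participants, 5 samples each.
# Features simply equal the label here; real sEMG features would not.
samples = [(f"p{p}", g, g) for p in range(10) for g in range(5)]
train, test = split_by_participant(samples)

train_ids = {pid for pid, _, _ in train}
test_ids = {pid for pid, _, _ in test}
print("participants in both sets:", train_ids & test_ids)
print("held-out accuracy:", accuracy(lambda features: features, test))
```

Splitting by participant rather than by sample is what separates "works out of the box for new users" from "works for people the model has already seen". A random per-sample split would leak each person's signal characteristics into training.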

The 3-page list of contributors indicates how much effort has gone into this since Ctrl Labs' acquisition in 2019. The demonstration of a neural interface that works without calibration is certainly noteworthy for the BCI world. However, there is still a significant gap between this paper and some earlier demos and teasers. In particular, their choice of a handwriting task, which achieves at best less than half the speed of typing, raises more questions.

I'd love to understand why they didn't pick the typing task they had previously shown in a video. In the paper, the authors stress that their ground truth was approximate and relied on prompts and inferred timing. A typing task, perhaps with a touch keyboard, would have given them true ground truth and scaled more easily. Typing would also leave much more room for the adaptive learning envisioned in Meta’s March 2021 post: “...imagine instead a virtual keyboard that learns and adapts to your unique typing style (typos and all) over time...”

Still from an earlier video with a virtual keyboard


Other areas that Meta had discussed earlier, but that this paper didn't touch on, were:

- Barely perceptible controls, or the use of much subtler non-perceptible movements (accessible neuromotor information that isn't being utilized)

- Intention and co-adaptive learning

- Using language models to correct human text

Meta has previously demonstrated and discussed these, with evidence that they have research devices and models capable of performing these tasks. Hopefully this recent paper is just a start, and there's more coming soon.
